Nvidia opens up chip-linking tech as AI demand surges

19 May 2025

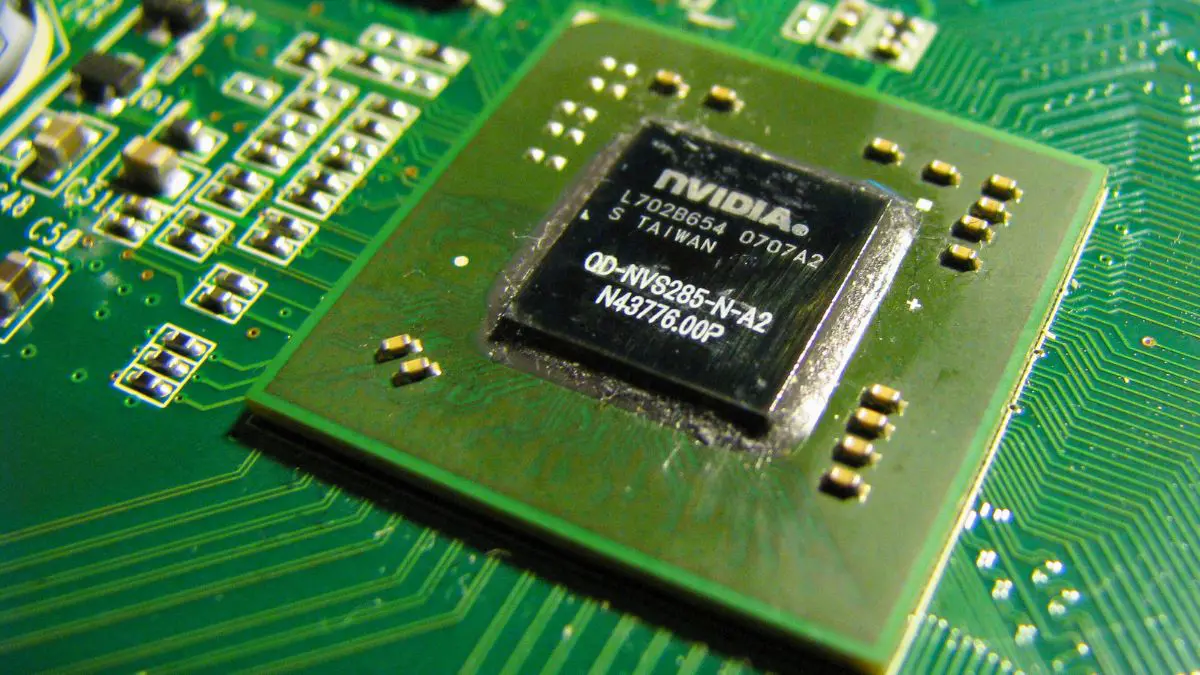

Nvidia is taking a significant step to extend its influence in the fast-evolving AI hardware space. On Monday, the company announced plans to sell its proprietary chip interconnect technology—NVLink Fusion—to other firms, enabling faster communication between AI chips. This move positions Nvidia not just as a chipmaker but as a key enabler in the broader AI hardware ecosystem.

The new NVLink Fusion will help chip designers link multiple processors more efficiently, a capability increasingly essential for training and running large-scale AI models. Semiconductor companies Marvell Technology and MediaTek are among the early adopters, signaling the technology's industry relevance and appeal. For Nvidia, opening up NVLink Fusion to external partners could translate into a broader role in shaping custom AI systems, beyond its own hardware stack.

NVLink isn’t new—it’s been used within Nvidia’s flagship chips, like the GB200, which pairs two Blackwell GPUs with a Grace CPU. What’s new is Nvidia’s decision to commercialize this interconnect technology. It reflects a broader industry trend: AI workloads are becoming so complex that multi-chip systems are now a necessity, not a luxury. Enabling high-speed data flow between chips is critical to meeting performance demands—and Nvidia wants to be the backbone of that infrastructure.

Nvidia doubles down in Taiwan

Nvidia CEO Jensen Huang announced the NVLink Fusion launch during a keynote at Computex, a major technology trade show running in Taipei from May 20–23. Huang also revealed that Nvidia will establish a new Taiwan headquarters in northern Taipei, a strategic move to strengthen ties in a region that plays a critical role in global chip manufacturing.

Huang reflected on the company’s transformation from a graphics chip pioneer for gamers to the driving force behind today’s AI boom. Just a few years ago, Nvidia’s story revolved around graphics chips for gaming. Now it dominates the AI chip market, fueled by the explosive growth in demand sparked by applications like ChatGPT.

Also revealed during the keynote: Nvidia's upcoming desktop AI workstation, the DGX Spark, designed for AI researchers. The system is already in production and will hit the market within weeks, expanding Nvidia’s footprint beyond data centers into desktop-level development tools.

Looking ahead: a roadmap of AI chip innovation

At Nvidia’s developer conference in March, Huang outlined the company’s roadmap: Blackwell Ultra chips launch later this year, the Rubin series will follow, and Feynman chips are expected by 2028. The company is also designing CPUs that could run Microsoft Windows using technology from Arm Holdings, signaling Nvidia’s intent to compete across an even broader computing landscape.

Computex 2025 is more than a product showcase: it is the first major in-person gathering of semiconductor executives in Asia since rising geopolitical tensions and U.S. trade policies prompted shifts in global supply chains. For Nvidia, it is an opportunity to solidify its leadership not just in chip performance but in platform strategy.

Summary:

Nvidia has launched NVLink Fusion, a technology that boosts AI chip-to-chip communication, and will sell it to other chipmakers such as Marvell and MediaTek. The move positions Nvidia as a foundational player in AI infrastructure. CEO Jensen Huang also announced a new Taiwan HQ and previewed products like the DGX Spark desktop AI workstation. With its expanding chip roadmap and growing role in the AI ecosystem, Nvidia continues to shape the future of computing—far beyond its GPU roots.

Frequently Asked Questions (FAQs)

1. What is NVLink Fusion and why does it matter?

NVLink Fusion is Nvidia's latest chip interconnect technology designed to allow multiple processors to communicate rapidly and efficiently. It is essential for building large-scale AI systems that require fast data exchange between chips, such as those used in training or running generative AI models.

2. How does NVLink Fusion differ from previous NVLink versions?

While earlier versions of NVLink were primarily used within Nvidia's own hardware platforms, Fusion is designed for external adoption. This version allows third-party chipmakers to build their own AI systems using Nvidia’s high-speed interconnect framework, making it more modular and collaborative.

3. Which companies are adopting NVLink Fusion?

MediaTek and Marvell Technology are among the first companies to integrate NVLink Fusion into their custom chip development. Their adoption signals strong industry interest in Nvidia’s ecosystem and could expand Nvidia’s market reach.

4. What are the business implications of Nvidia selling NVLink Fusion to others?

By offering NVLink Fusion to outside chipmakers, Nvidia is positioning itself not just as a product provider but as an AI infrastructure standard-bearer. This strategy could lock more firms into its ecosystem, generate new revenue streams, and solidify Nvidia’s dominance in the AI supply chain.

5. What is the DGX Spark and who is it for?

DGX Spark is a desktop AI workstation tailored for researchers and developers working on AI models. It brings powerful AI computation to the desktop level, potentially democratizing access to high-performance AI tools for smaller labs, startups, and universities.

6. How does Nvidia’s chip roadmap affect the AI industry?

Nvidia’s planned releases—including Blackwell Ultra, Rubin, and Feynman chips—set a clear trajectory for the future of AI computing. These chips are designed to address increasingly complex AI workloads and could shape industry standards over the next several years.

7. Why is Nvidia building a new headquarters in Taiwan?

Taiwan plays a critical role in the semiconductor supply chain and is home to key partners like TSMC. Establishing a headquarters in Taipei strengthens Nvidia’s regional presence and places it closer to the heart of the chip manufacturing ecosystem.

8. How does this announcement fit into the larger AI industry trend?

As AI models grow in size and complexity, there’s a growing need for modular, scalable, and high-speed computing systems. Nvidia’s announcements address this trend head-on, giving businesses the tools to build and run next-generation AI applications efficiently.