Cisco Unveils AI Networking Chip to Strengthen Position in Data Centre Boom

By Axel Miller | 10 Feb 2026

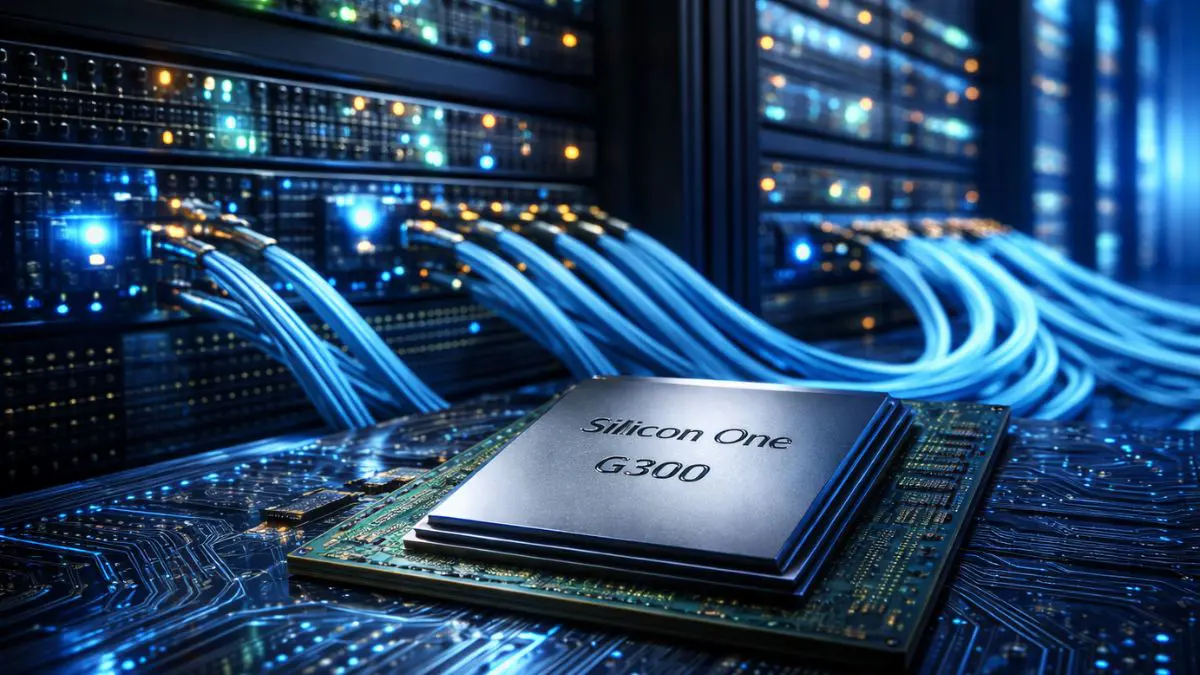

Cisco Systems has unveiled a new high-performance networking chip and router designed to accelerate data movement inside massive artificial intelligence data centres, positioning the company to compete more directly with rival infrastructure suppliers as demand for AI computing surges.

The new Silicon One G300 switch chip is engineered to handle the intense data traffic generated by large AI training and inference systems, where tens of thousands of high-performance processors must communicate with each other in real time. Cisco said the G300 is expected to be available in the second half of 2026.

Built for AI-Scale Traffic

As AI models grow larger and more complex, networking bottlenecks have become a major performance constraint. Unlike traditional enterprise data traffic, AI workloads can trigger sudden and intense bursts of data that overwhelm conventional network systems.

Cisco said the G300, built on Taiwan Semiconductor Manufacturing Co.’s advanced 3-nanometre process, incorporates features that act like “shock absorbers” for the network, helping prevent congestion during traffic spikes, which are frequent in large AI clusters.

According to Martin Lund, executive vice president of Cisco’s Common Hardware Group, the system can detect slowdowns and automatically reroute traffic within microseconds, helping maintain stable performance across vast computing environments.

Performance and Efficiency Gains

Cisco says its internal performance projections suggest that the G300’s networking efficiency could help some AI computing workloads complete up to 28% faster, not through faster processors but through reduced communication delays within the network fabric.

Lund said in a statement that such congestion “happens quite regularly” in clusters with tens of thousands of connections. “We focus on total end-to-end efficiency of the network,” he said.

The emphasis on networking reflects a broader industry trend: the speed at which data moves between chips is becoming as critical as the processing power within them.

A Growing Battlefield in AI Infrastructure

The announcement highlights how networking has become a strategic front in the AI boom. While Nvidia remains the dominant supplier of AI processors, it has increasingly moved into networking integration, embedding custom network interfaces into its latest AI platforms.

Broadcom is also aggressively targeting the AI networking opportunity with its Tomahawk series of high-capacity switch chips, widely used in hyperscale data centres.

With analysts projecting hundreds of billions of dollars in AI-related data centre investment over the coming years, networking vendors are racing to ensure their hardware becomes the backbone of next-generation AI clusters.

Cisco’s launch signals its ambition to capture a larger share of this expanding market by positioning itself not just as a traditional networking supplier, but as a key infrastructure enabler for large-scale AI deployments.

Why This Matters

The rapid expansion of artificial intelligence is shifting the balance of power inside data centres, where networking performance is becoming as critical as processing power. As AI clusters scale to tens or hundreds of thousands of chips, delays in data movement can significantly erode system efficiency and increase operating costs.

Cisco’s push into AI-optimized networking reflects a broader industry pivot: infrastructure suppliers are racing to control not just AI processors but the connective fabric that binds them together. For hyperscale cloud providers investing billions of dollars in AI capacity, improvements in networking efficiency can translate directly into faster model training, lower energy consumption and better returns on capital.

The move also signals intensifying competition in AI infrastructure beyond processors alone. As Nvidia and Broadcom deepen their push into AI networking, Cisco’s launch underscores how the next phase of the AI boom will be defined by end-to-end system optimization, not just raw computing power.

FAQs

Q1. What did Cisco announce?

Cisco unveiled its new Silicon One G300 AI-focused networking chip and an accompanying high-performance router designed to improve data transfer speeds inside large AI data centres.

Q2. Why is networking important for AI systems?

Large AI models run on thousands of processors working together. If the network connecting those processors slows down, overall system performance can degrade. Faster, more efficient networking reduces communication delays and improves throughput.

Q3. How does Cisco’s new chip improve performance?

The G300 includes traffic-management features that detect congestion and automatically reroute data within microseconds. Cisco’s internal projections suggest some AI workloads could complete up to 28% faster as a result.

Q4. Who are Cisco’s main competitors in this space?

Cisco is competing with Nvidia, which integrates networking functions with its AI accelerators, and Broadcom, whose Tomahawk chips are widely used in data centre networks.

Q5. When will the Silicon One G300 be available?

Cisco expects the chip to be available to customers in the second half of 2026.

Q6. Why does this matter for the AI industry?

As AI infrastructure spending accelerates, networking performance is becoming a key determinant of system efficiency. Vendors that master high-speed networking for AI stand to gain significant market share.